AWS recently announced “Amazon RDS Snapshot Export to S3” feature wherein you can now export Amazon Relational Database Service (Amazon RDS) or Amazon Aurora snapshots to Amazon S3 as Apache Parquet, an efficient open columnar storage format for analytics.

I had a use-case to refresh Athena tables daily with full data set in Account B(us-east-1) from Aurora MySQL database running under Private subnet in Account A (us-west-2). The two solutions I could think of was –

- Have EC2 instance running in Public subnet to act as bridge to the Aurora instance and configure SSH Tunnel to pull the data to S3 using python script.

- Use the newly released RDS snapshot export to S3 feature creating a server-less solution.

I used this new feature with cross-region replication enabled for S3 bucket to replicate the data to S3 bucket in Account B.

In this post, I will go through the steps to have the data into staging bucket of Account B and few issues I faced during this setup –

1. Setup Cross-Region replication between the Source S3 bucket in Account A(us-west-2) and Destination Bucket in Account B(us-east-1).

I created a new S3 Bucket and navigated to Replication under Management tab. For both the source and destination S3 buckets versioning needs to be enabled. If you want to replicate objects encrypted with AWS KMS make sure to enable the check box under Replication criteria.

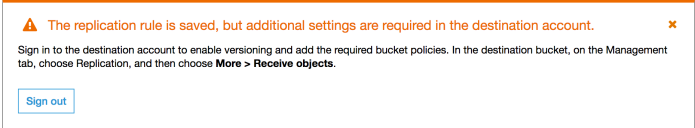

You will be asked to add both, the required S3 bucket policy and KMS policy, in to the destination account along with enabling “Receive Objects” in destination bucket.

2. Now lets look at the Lambda Function design –

The CloudWatch Event is scheduled to trigger once per day

Cron expression 30 10 ? * MON-SUN *

You would observe “Layers (1)” attached with the lambda function in the diagram above. So what are AWS Lambda Layers? A layer is a ZIP archive that contains libraries, a custom runtime, or other dependencies.

The layer was added in lambda function to avoid below error –

response = client.start_export_task(

ExportTaskIdentifier=export_task,

SourceArn=get_latest_snapshot_name,

S3BucketName=s3_bucket,

IamRoleArn=iam_role,

KmsKeyId=kms_key,

ExportOnly=tables

)

Response:

{

"errorMessage": "'RDS' object has no attribute 'start_export_task'",

"errorType": "AttributeError",

"stackTrace": [

" File \"/var/task/lambda_function.py\", line 48, in instantiate_s3_export\n response = client.start_export_task(\n",

" File \"/var/runtime/botocore/client.py\", line 516, in __getattr__\n self.__class__.__name__, item)\n"

]

}

The error is because lambda used older version of boto3 and botocore. To avoid this I had to add layer for boto3-1.11.14 and botocore-1.14.14.

So how do you add layer?

https://docs.aws.amazon.com/lambda/latest/dg/configuration-layers.html#configuration-layers-path

Follow the below steps to include the latest Boto3 SDK as a layer (Perform the following steps on your Local machine or Linux Ec2 instance(recommended))

- Create folder named ‘python’: mkdir python

- Navigate to ‘python’ folder: cd python

- Instal ‘boto3’ inside the ‘python’ folder: pip install boto3 -t /home/ec2-user/python/

- Navigate back to previous dictionary: cd ..

- Zip the ‘python’ folder: zip -r layer.zip python/

- Go to Lambda console –> Layers –> Create layers

- Configure the layer (upload the .zip file)

- Add the layer to function

I wrote a simple lambda function to get the latest system snapshot and invoke start_export_task method to initiate export to s3.

import os

import json

import boto3

from datetime import datetime

def get_db_snapshot():

"""

Funtion to get the latest snapshot

Returns: Latest snapshot

"""

db_cluster_id = os.environ["DB_CLUSTER_ID"]

client = boto3.client("rds")

desc_cluster_snapshots = client.describe_db_cluster_snapshots(

DBClusterIdentifier=db_cluster_id,

SnapshotType="automated"

)

db_snapshots = {}

for snapshot in desc_cluster_snapshots["DBClusterSnapshots"]:

db_snapshots.update([(snapshot["DBClusterSnapshotArn"], snapshot["SnapshotCreateTime"])])

return (max(db_snapshots.items()))

def jsondatetimeconverter(o):

"""To avoid TypeError: datetime.datetime(...) is not JSON serializable"""

if isinstance(o, datetime):

return o.__str__()

def instantiate_s3_export(event, context):

"""

Function to invoke start_export_task using

recent most system snapshot

Return: Response

"""

s3_bucket = os.environ["S3_BUCKET"]

iam_role = os.environ["IAM_ROLE"]

kms_key = os.environ["KMS_KEY"]

tables = os.environ["TABLE_LIST"]

tables = json.loads(tables)

client = boto3.client("rds")

get_latest_snapshot_name,get_latest_snapshot_time = get_db_snapshot()

if get_latest_snapshot_time.date() == datetime.today().date():

today_date = datetime.today().strftime("%Y%m%d")

export_task = "db-table-backup-"+today_date

response = client.start_export_task(

ExportTaskIdentifier=export_task,

SourceArn=get_latest_snapshot_name,

S3BucketName=s3_bucket,

IamRoleArn=iam_role,

KmsKeyId=kms_key,

ExportOnly=tables

)

return(json.dumps(response, default=jsondatetimeconverter))

else:

return("Not invoking start export task as the backup its not the latest backup.")

As I added “TABLE_LIST” to be environment variable, it took some time to figure out how to provide “list” of table names. In RDS console it is quite simple. You can provide multiple table separated by space, but the same was not working in lambda function. What worked for me was –

Added TABLE_LIST with value -- ["schema.table1","schema.table2"] In the lambda function -- tables = os.environ["TABLE_LIST"] tables = json.loads(tables)

Another issue which I encountered —

An error occurred (AccessDenied) when calling the StartExportTask operation: User: arn:aws:sts::xxxxxxxxxxxx:assumed-role/export-to-s3/export-to-s3 is not authorized to perform: rds:StartExportTask”,

After spending lot of time looking over and over again into the IAM Role, I figured out that the issue was in “Resource” where I had mentioned DB Cluster ARN. Changing it to “*” resolved the issue. Also make sure to have proper permissions for KMS (KMS ARN works).

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "Stmt1581236532516",

"Action": [

"rds:DescribeDBClusterSnapshots",

"rds:DescribeDBClusters",

"rds:DescribeDBInstances",

"rds:DescribeDBSnapshots",

"rds:DescribeExportTasks",

"rds:StartExportTask"

],

"Effect": "Allow",

"Resource": [

"*"

]

}

]

}

Once the objects (parquet files) were available in the staging bucket of Account B, lambda function was triggered to initiate glue job to perform the required transformation, write the data (as new parquet files) into final S3 bucket and update the location for Athena tables.

References –